We submitted this today. OpenAI’s founders include Elon Musk and its CEO is Sam Altman. GPT-3 is OpenAI’s hugely powerful (and hyped) language model. If you don’t know what that means, think of it as an algorithm that can generate extremely human-like original text. GPT-2 was impressive in terms of its evolution from earlier text generators, and GPT-3 is an even bigger step forward.

A metaphor: consider someone wanting to learn tennis. The best way to learn is to practice with a skilled partner. But partners can be hard to find: maybe you’re in an area where there are few tennis players. Maybe you’re only available at hours when other players are asleep. What can you do to keep learning? You hit the ball against the wall. Sure, it’s not as instructive as a live player, but you can still improve. The wall is a feedback mechanism, like learning from reading a book or practicing with flash cards. If you have better access to technology, you can use a ball machine that serves shots to you. A ball machine can be adjusted to emulate different skill levels, shot variety, and speeds. The ball machine makes the tennis coach’s job more scalable.

We want to build the ball machine of experiential learning. Our goal has been to develop an agent that can assist in workshops. The thesis is that the collective intelligence of people in a session, aided by artificial intelligence, could be incredibly effective for training, learning, and problem solving.

The AI agent would play a key role in the learning process. Effective learning happens experientially through feedback with a skilled partner (plenty of research to back this up, btw). Person-to-person feedback-heavy learning doesn’t scale, however. There are not enough mentors and peers around to support this style of education.

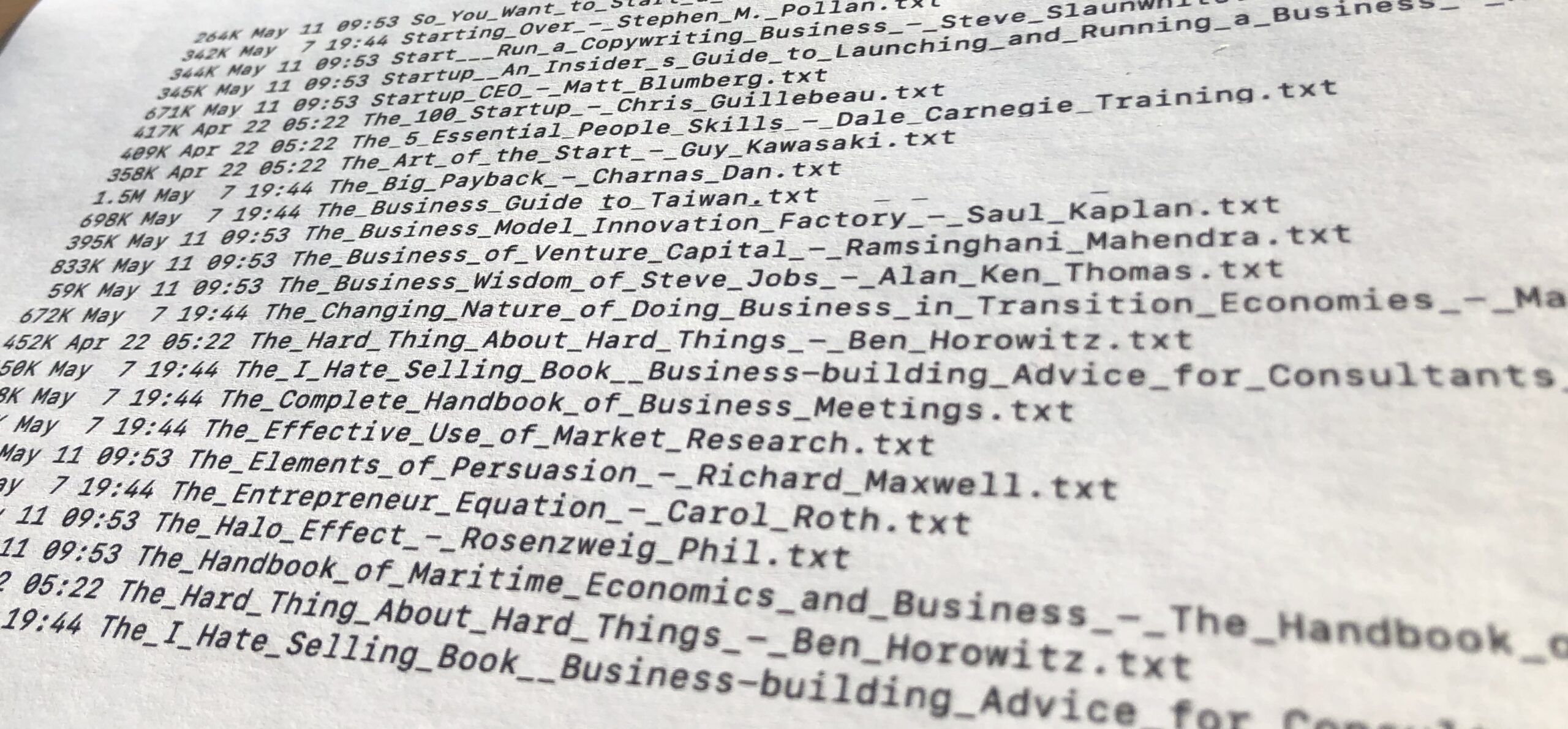

We have fine-tuned GPT-2 and other models on interesting datasets: business books, Paul Graham essays, etc. We’ve spent the last 18 months fine-tuning, developing prototype agents, and conducting in-person sessions and workshops (over 50 sessions collecting over 5,500 queries) to understand how non-technical users interact with assistance from AI models.

Some of the insights so far:

- Confirmation bias holds heavy sway (engagement increases when models produce results the users agree with)

- Users struggle to grasp that the model has little concept of truth. As soon as the model outputs an inaccurate statement (e.g., “Barack Obama was an MLB player for the Baltimore Orioles”), user sentiment shifts into a different mode

- Buddhist koan-like, cryptic outputs trigger deeper engagement and thought. It’s pretty thrilling to watch users’ faces when they try to discern if the model’s output was brilliant, inane, or both

We acknowledge the common risks associated with generating text from language models, including generating incorrect and misleading results. However, we feel that when an AI agent is used in a workshop training multiple people, there are many opportunities for students to receive corrective instruction. This instruction can come from our teacher(s), peers in the workshop, or even the model itself. We expect these risks to be minimal compared to releasing the GPT-3 API publicly on the internet.